OpenShift 4.3 on RHOSP 13: Installation and Integrations

I would like to share my experience installing and integrating OpenShift with various OpenStack services, namely Cinder, Swift and Keystone (Neutron and Octavia TBD).

Prerequisites

- This was not a disconnected environment. Note that the virtual machines must boot with a working DNS configuration so that OpenShift can download the proper CoreOS images (NeutronDhcpAgentDnsmasqDnsServers parameter from Director).

- On Ceph RGW, the "account in URL" option must be enabled (rgw_swift_account_in_url: true parameter from Director).

Installation

Following official documentation: https://access.redhat.com/documentation/en-us/openshift_container_platform/4.3/html-single/installing_on_openstack/index

I prepared a bastion server within the OpenShift project for convenience, downloaded the OpenStack credentials file onto it, and added the password to that file.

[cloud-user@bastion ~]$ ./openshift-install create install-config --dir=installationDoc

? SSH Public Key /home/cloud-user/.ssh/id_rsa.pub

? Platform openstack

? Cloud openstack

? ExternalNetwork public

? APIFloatingIPAddress 192.168.226.42

? FlavorName openshift

? Base Domain openshift.com.br

? Cluster Name lab01

? Pull Secret [? for help]

The resulting configuration:

[cloud-user@bastion ~]$ cat installationDoc/install-config.yaml

apiVersion: v1
baseDomain: openshift.com.br
compute:
- hyperthreading: Enabled
  name: worker
  platform: {}
  replicas: 1
controlPlane:
  hyperthreading: Enabled
  name: master
  platform: {}
  replicas: 1
metadata:
  creationTimestamp: null
  name: lab01
networking:
  clusterNetwork:
  - cidr: 10.128.0.0/14
    hostPrefix: 23
  machineCIDR: 10.0.0.0/16
  networkType: OpenShiftSDN
  serviceNetwork:
  - 172.30.0.0/16
platform:
  openstack:
    cloud: openstack
    computeFlavor: openshift
    externalDNS: null
    externalNetwork: public
    lbFloatingIP: 192.168.226.42
    octaviaSupport: "0"
    region: ""
    trunkSupport: "1"
publish: External
pullSecret: '{"auths":{"cloud.openshift.com":{"auth":"abc==","email":"jlema@redhat.com"},"quay.io":{"auth":"abc==","email":"jlema@redhat.com"},"registry.connect.redhat.com":{"auth":"abc==","email":"jlema@redhat.com"},"registry.redhat.io":{"auth":"abc==","email":"jlema@redhat.com"}}}'
sshKey: |
  ssh-rsa abc== cloud-user@bastion

Contrary to what the documentation says about the number of replicas (a positive integer greater than or equal to 3 for control plane nodes and greater than or equal to 2 for worker nodes), the installation actually works with 1 control plane node and 1 worker.

Proceed with the actual installation:

[cloud-user@bastion ~]$ ./openshift-install create cluster --dir=installationDoc --log-level=debug

On the first attempt we got this error:

...

INFO Creating infrastructure resources...

...

INFO Waiting up to 30m0s for the Kubernetes API at https://api.lab01.openshift.oss.timbrasil.com.br:6443...

ERROR Attempted to gather ClusterOperator status after installation failure: listing

INFO Pulling debug logs from the bootstrap machine

ERROR Attempted to gather debug logs after installation failure: failed to create SSH client, ensure the proper ssh key is in your keyring or specify with --key: dial tcp 192.168.226.40:22: connect: connection refused

FATAL Bootstrap failed to complete: waiting for Kubernetes API: context deadline exceeded

It was an MTU issue, because our environment uses jumbo frames by default. There is currently no way to set the MTU in IPI installations, and it was decided to wait for a future feature that lets you create the networking in OpenStack yourself and then instruct the installer to use those existing networking resources while still installing with the IPI workflow (something like "bring your own network").
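One way to confirm an MTU mismatch of this kind is to ping with the "don't fragment" bit set and a payload sized for the MTU under test. The following is only a sketch, assuming a Linux host with iputils ping; the function names and the target address in the example are illustrative and not part of the original procedure.

```shell
#!/usr/bin/env bash
# Largest ICMP payload that fits in one unfragmented IPv4 packet of the given
# MTU: MTU minus 20 bytes of IPv4 header minus 8 bytes of ICMP header.
icmp_payload_for_mtu() {
  local mtu="$1"
  echo $(( mtu - 28 ))
}

# Send a few pings with the "don't fragment" bit set (iputils ping, -M do).
# If these fail while smaller payloads succeed, the path MTU is below $mtu.
probe_mtu() {
  local host="$1" mtu="$2"
  ping -M do -c 3 -s "$(icmp_payload_for_mtu "$mtu")" "$host"
}

# Example (hypothetical target, run from a machine on the cluster network):
#   probe_mtu 192.168.226.42 1500
```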

The (not very elegant) solution was to wait for the network to be created and then manually set its MTU before the virtual machines are created:

[cloud-user@bastion ~]$ watch -n 10 openstack network list

[cloud-user@bastion ~]$ openstack network set --mtu 1400 lab01-n67mh-openshift
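The manual watch-and-set step above could be scripted so that the MTU is changed as soon as the network appears. This is a minimal sketch: the name pattern (cluster name, random infix, "-openshift" suffix) is an assumption inferred from the single example above, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Poll until a network named <cluster>-<random>-openshift exists, then print
# its name. The pattern is assumed from the example lab01-n67mh-openshift.
wait_for_cluster_network() {
  local cluster="$1" interval="${2:-10}" name
  while true; do
    name="$(openstack network list -f value -c Name \
            | grep -E "^${cluster}-[a-z0-9]+-openshift$" | head -n 1)"
    if [ -n "$name" ]; then
      echo "$name"
      return 0
    fi
    sleep "$interval"
  done
}

# Wait for the installer-created network and set its MTU once it exists.
set_cluster_network_mtu() {
  local cluster="$1" mtu="$2" net
  net="$(wait_for_cluster_network "$cluster")"
  openstack network set --mtu "$mtu" "$net"
}

# Example, run in a second terminal while the installer creates resources:
#   set_cluster_network_mtu lab01 1400
```

Polling keeps the race window small, but it is still a race against the installer: the MTU has to be in place before the first virtual machine gets its DHCP lease.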

Another issue we found at this point is that, for some reason, the OpenShift installer does not use soft anti-affinity policies (unlike on AWS and unlike the OpenStack UPI installation), so it may create all the masters/workers on the same server. The only way to spread them out would be to define different regions for the nodes, which is not supported on the RHOSP side.

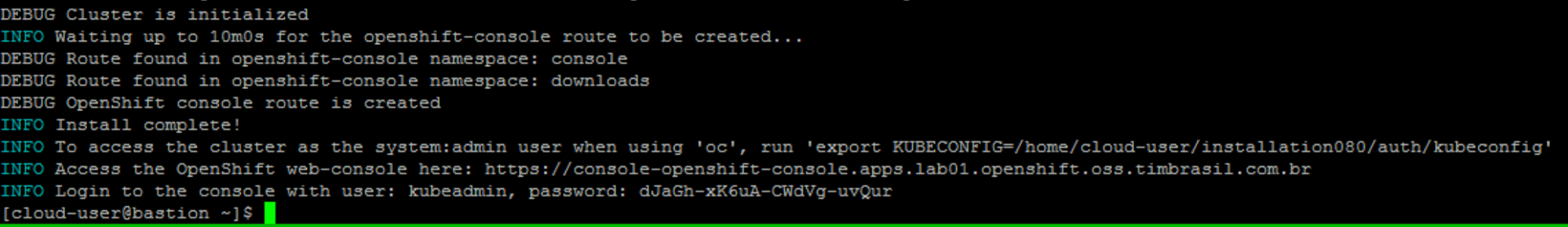
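To check whether the installer actually placed several nodes on the same hypervisor, the server listing can be grouped by host. The sketch below assumes admin credentials (the Host column is normally visible only to admins); the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Read "server-name host" pairs on stdin and print every hypervisor that
# carries more than one of the servers, followed by the servers placed on it.
find_colocated_servers() {
  awk '
    NF >= 2 { count[$2]++; names[$2] = names[$2] " " $1 }
    END {
      for (h in count)
        if (count[h] > 1) print h ":" names[h]
    }
  ' | sort
}

# Example:
#   openstack server list --long -f value -c Name -c Host | find_colocated_servers
```

An empty result means every listed server landed on a different hypervisor.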

Finally, installation completed:

Cinder Integration

Worked out of the box:

[cloud-user@bastion ~]$ oc get storageClass

NAME TYPE

standard (default) kubernetes.io/cinder

[cloud-user@bastion ~]$ oc describe storageClass standard

Name: standard

IsDefaultClass: Yes

Annotations: storageclass.kubernetes.io/is-default-class=true

Provisioner: kubernetes.io/cinder

Parameters: <none>

Events: <none>

[cloud-user@bastion ~]$ oc get -o yaml storageClass standard

allowVolumeExpansion: true

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

annotations:

storageclass.kubernetes.io/is-default-class: "true"

creationTimestamp: 2020-03-24T18:17:11Z

name: standard

ownerReferences:

- apiVersion: v1

kind: clusteroperator

name: storage

uid: 82643882-1e38-4fdd-98c2-fb19636ad003

resourceVersion: "10307"

selfLink: /apis/storage.k8s.io/v1/storageclasses/standard

uid: 7bb9919c-2ea7-4f1d-aedf-111b6c16e68c

provisioner: kubernetes.io/cinder

reclaimPolicy: Delete

volumeBindingMode: WaitForFirstConsumer

Create a persistent volume claim from this template:

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: vol-teste-vai

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 10Gi

storageClassName: standard

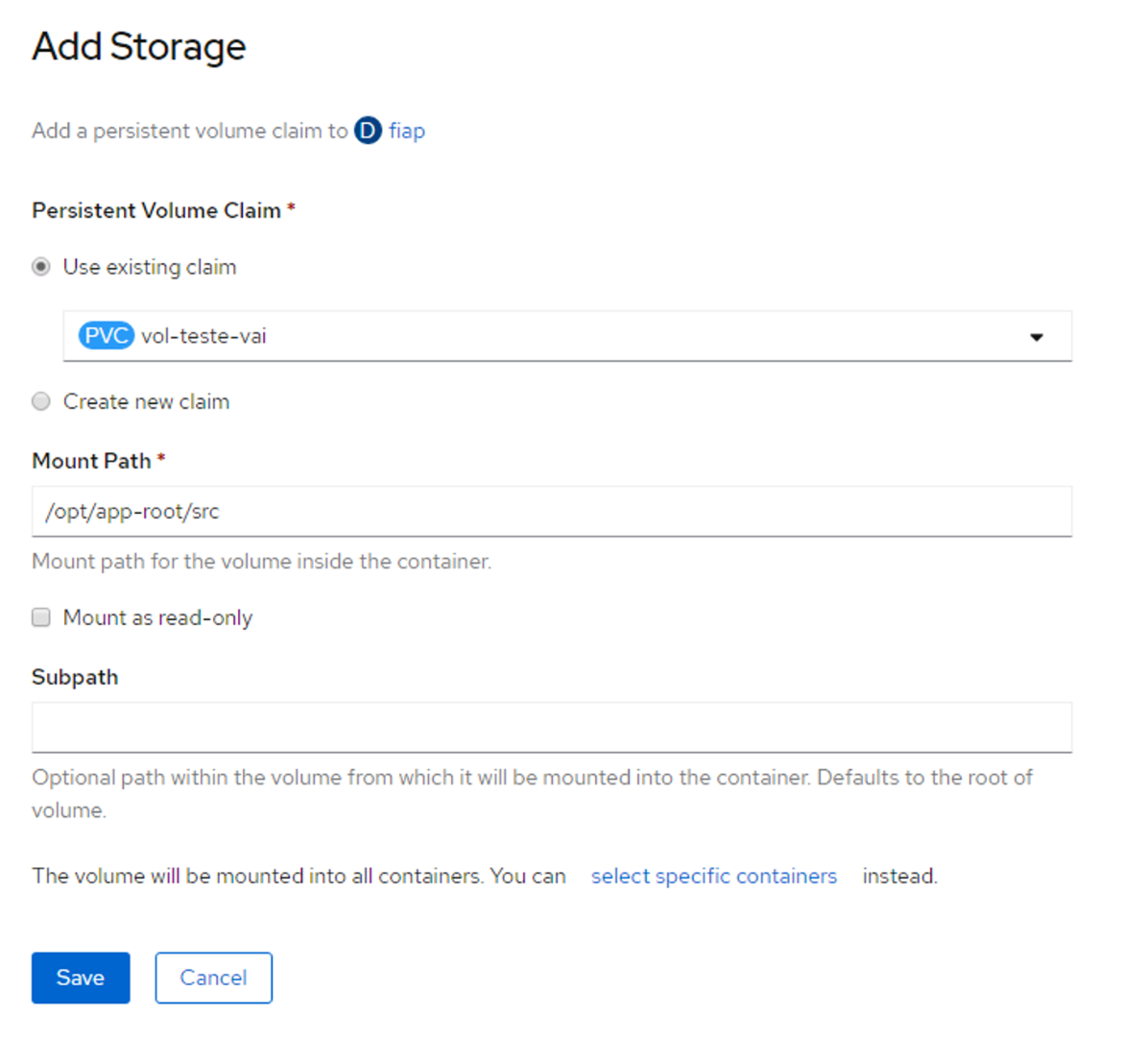

As the volumeBindingMode is set to WaitForFirstConsumer, we had to actually attach the PVC to a deployment in order for the Cinder volume to be created. For that, we used the OpenShift GUI.
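The first consumer does not have to come from the GUI; any pod that mounts the claim should trigger provisioning. A minimal sketch follows, in which the pod name, container image and mount path are illustrative placeholders rather than what was used in this lab.

```shell
#!/usr/bin/env bash
# Print a minimal Pod manifest that mounts the given PVC.
# Pod name, image and mount path are placeholders chosen for illustration.
consumer_pod_manifest() {
  local claim="$1"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${claim}-consumer
spec:
  containers:
  - name: sleeper
    image: registry.access.redhat.com/ubi8/ubi-minimal
    command: ["sleep", "3600"]
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: ${claim}
EOF
}

# Example:
#   consumer_pod_manifest vol-teste-vai | oc apply -f -
#   oc get pvc vol-teste-vai    # should move from Pending to Bound
```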

From the OpenShift side:

[cloud-user@bastion ~]$ oc get pv

NAME CAPACITY ACCESSMODES RECLAIMPOLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-691a2bbb-0d5a-4d2f-94e2-f50708085c7d 10Gi RWO Delete Bound default/vol-teste-vai standard 55s

From the OpenStack side, this is the actual Cinder volume created dynamically by OpenShift:

(overcloudrc) [cloud-user@bastion ~]$ openstack volume list

+--------------------------------------+--------------------------------------------------------------+-----------+------+-------------+

| ID | Name | Status | Size | Attached to |

+--------------------------------------+--------------------------------------------------------------+-----------+------+-------------+

| b75c57b4-ea8f-4d73-b0d6-28ab02458842 | lab01-9v54g-dynamic-pvc-691a2bbb-0d5a-4d2f-94e2-f50708085c7d | available | 10 | |

+--------------------------------------+--------------------------------------------------------------+-----------+------+-------------+
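The listing suggests that the Cinder volume name is built from the cluster infrastructure ID, the literal "dynamic" and the PV name. Assuming that pattern holds (it is inferred from this one volume only), a small helper can map a PV to its Cinder volume; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Build the Cinder volume name that corresponds to a PV, following the pattern
# visible in the listing above: <infra id>-dynamic-<pv name>.
cinder_volume_name() {
  local infra_id="$1" pv_name="$2"
  echo "${infra_id}-dynamic-${pv_name}"
}

# Example: look up the Cinder volume backing a given PV.
#   infra_id="$(oc get infrastructure cluster -o jsonpath='{.status.infrastructureName}')"
#   openstack volume show "$(cinder_volume_name "$infra_id" pvc-691a2bbb-0d5a-4d2f-94e2-f50708085c7d)"
```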

Swift Integration

Worked out of the box.

From the OpenStack side:

(overcloud) [stack@manager ~]$ openstack container list

+----------------------------------------------------------------+

| Name |

+----------------------------------------------------------------+

| lab01-9v54g-image-registry-phbhqxrotxhvfkunvehpebfyeyajvoiirer |

+----------------------------------------------------------------+

We can see the docker registry images inside the container:

(overcloud) [stack@manager ~]$ swift list lab01-9v54g-image-registry-phbhqxrotxhvfkunvehpebfyeyajvoiirer | grep registry

files/docker/registry/v2/blobs/sha256/02/02c05272c8dd4f99d68e12066db506412bef3c460dbb4a587a7cafc8af593e35/data

files/docker/registry/v2/blobs/sha256/45/455ea8ab06218495bbbcb14b750a0d644897b24f8c5dcf9e8698e27882583412/data

files/docker/registry/v2/blobs/sha256/46/46fc24a071a44b29a3ba49c94f75a47514a56470d539c9204f3e7688973fc93a/data

files/docker/registry/v2/blobs/sha256/4a/4abfcf58ff10724f3886be18423f80a481e90c4b258fbecad6ea06917a266003/data

files/docker/registry/v2/blobs/sha256/56/566b3d0a5bcd5903e86174ab1551a402b1c0dade2aa918d86895516bd2d0dd43/data

files/docker/registry/v2/blobs/sha256/6d/6d3329d5faa944944d54166b52dd7d11fcb99e6467950b5679095a44346c1cc8/data

files/docker/registry/v2/blobs/sha256/81/8170b35922ece1799b004d6fe41e253808d47da894197d3c7fe2b49614336fa7/data

files/docker/registry/v2/blobs/sha256/84/84e620d0abe585d05a7bed55144af0bc5efe083aed05eac1e88922034ddf1ed2/data

files/docker/registry/v2/blobs/sha256/b5/b57725894ce17afd25fefcef7fdb2467837e85834bf482759b9ca67b261a3724/data

files/docker/registry/v2/blobs/sha256/bb/bb13d92caffa705f32b8a7f9f661e07ddede310c6ccfa78fb53a49539740e29b/data

files/docker/registry/v2/repositories/openshift/python/_layers/sha256/455ea8ab06218495bbbcb14b750a0d644897b24f8c5dcf9e8698e27882583412/link

files/docker/registry/v2/repositories/openshift/python/_layers/sha256/46fc24a071a44b29a3ba49c94f75a47514a56470d539c9204f3e7688973fc93a/link

files/docker/registry/v2/repositories/openshift/python/_layers/sha256/4abfcf58ff10724f3886be18423f80a481e90c4b258fbecad6ea06917a266003/link

files/docker/registry/v2/repositories/openshift/python/_layers/sha256/8170b35922ece1799b004d6fe41e253808d47da894197d3c7fe2b49614336fa7/link

files/docker/registry/v2/repositories/openshift/python/_layers/sha256/84e620d0abe585d05a7bed55144af0bc5efe083aed05eac1e88922034ddf1ed2/link

files/docker/registry/v2/repositories/openshift/python/_layers/sha256/bb13d92caffa705f32b8a7f9f661e07ddede310c6ccfa78fb53a49539740e29b/link

files/docker/registry/v2/repositories/openshift/python/_manifests/revisions/sha256/566b3d0a5bcd5903e86174ab1551a402b1c0dade2aa918d86895516bd2d0dd4/link
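For a quick sanity check, the container name can be read from the image registry operator configuration and the stored blobs counted from the object listing. This is a sketch only: the helper name is hypothetical, and it assumes the listing format shown above.

```shell
#!/usr/bin/env bash
# Count registry blobs in a Swift object listing read from stdin.
# Each stored blob appears as .../blobs/sha256/<xx>/<digest>/data.
count_registry_blobs() {
  grep -c -E '/blobs/sha256/[0-9a-f]{2}/[0-9a-f]+/data$'
}

# Example:
#   container="$(oc get configs.imageregistry.operator.openshift.io cluster \
#                -o jsonpath='{.spec.storage.swift.container}')"
#   swift list "$container" | count_registry_blobs
```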

Keystone Integration

This is tricky when OpenStack uses self-signed certificates. The OpenShift Keystone identity provider only works with https Keystone endpoints.

On the other hand, there are several open issues with deploying OpenShift on top of endpoints that use self-signed certificates:

- [OSP] allow retrieval of ignition files from behind an encrypted endpoint which uses a self-signed certificate / target release 4.4.0

- [IPI][OSP] Machine-api cannot create workers on osp envs installed with self-signed certs / target release 4.4.0

- [OSP] allow retrieval of ignition files from behind an encrypted endpoint which uses a self-signed certificate / target release 4.3.z

- [IPI][OSP] Machine-api cannot create workers on osp envs installed with self-signed certs / target release 4.3.z
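Since the Keystone identity provider requires an https endpoint, it may help to check the public identity endpoint before attempting the integration. The helper below is a hypothetical sketch and only inspects the URL scheme; it does not validate the certificate.

```shell
#!/usr/bin/env bash
# Succeed only if the given URL uses the https scheme.
is_https_url() {
  case "$1" in
    https://*) return 0 ;;
    *) return 1 ;;
  esac
}

# Example:
#   url="$(openstack endpoint list --service identity --interface public -f value -c URL)"
#   is_https_url "$url" && echo "Keystone public endpoint uses https"
```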

TBD

Neutron/Octavia Integration (Kuryr)

TBD
